DStep is a tool for automatically generating D bindings for C and Objective-C libraries. This is implemented by processing C or Objective-C header files and outputting D modules. DStep uses the Clang compiler as a library (libclang) to process the header files.

Background

The first version of DStep was released on the 7th of July, 2012. There have been four subsequent releases, the last of which was on the 16th of January, 2016. Quite a lot has happened in the D world and with DStep since then.

After the release of DStep 0.2.1 in January 2016, there wasn’t much progress on DStep. I had a limited amount of time and chose to spend it on other projects. Fortunately, in 2016, DStep got picked as one of four D-related projects for Google Summer of Code (GSoC). The student who chose to work on DStep was Wojciech Szęszoł. He did a tremendous amount of work and pushed DStep forward by years compared to the time it would have taken me. In fact, I was often a blocker because I couldn’t keep up with reviewing all the changes he made.

New Release

The latest release of DStep contains a huge number of new features and bug fixes. A lot of the new features add support for translating C preprocessor macros in various forms. DStep also gained support for one more platform: Windows. Here follow some of the new features available in DStep 1.0.0:

Support for Simple Defines

This feature adds support for translating a simple form of #define to a manifest constant in D. Example:

#define FOO 1

The above C code is translated to the following D code:

enum FOO = 1;

DStep will try to translate the C code so that the D code looks as much as possible like the original C code. If a #define contains an expression instead of a single literal, DStep will try to preserve the original expression:

#define FOO 1 + 3

Instead of translating this to a manifest constant with the value of 4 (which would be semantically correct), DStep will preserve the original expression and translate it to:

enum FOO = 1 + 3;

This also goes for other types of literals, like hexadecimal literals:

#define FOO 0x1

Here DStep will preserve the hexadecimal literal and translate it to:

enum FOO = 0x1;

Function-Like Macros

DStep is now able to translate function-like macros. This is a pretty advanced feature that requires a small parser for the macros. DStep uses libclang to tokenize the macros and the parses them to be able to do the proper translations. The most basic example looks like:

#define FOO() 0 + 1

The above macro will translate to the following D code:

extern (D) int FOO()

{

return 0 + 1;

}

Although not shown here (to minimize the examples), DStep will output extern (C): at the top of each file. Therefore, for macros translated to functions, DStep will add extern (D) to give the functions D linkage and mangling.

Here’s an example of a C macro containing parameters:

#define FOO(a, b) a + b

Unfortunately, in C, a and b can be basically anything. D doesn’t have an exact corresponding feature. DStep will translate this as accurately as

possible by outputting a templated function:

extern (D) auto FOO(T0, T1)(auto ref T0 a, auto ref T1 b)

{

return a + b;

}

The assumption in this translation is that a and b will be a value of some kind of type. They can either be of the same type or of different types. To

avoid copying any of the values, ref parameters are used. Since an rvalue cannot be passed to a ref parameter, auto ref is used instead to properly handle both rvalues and lvalues.

More advanced expressions are supported as well:

#define BAR 4

#define FOO(a, b) a + 3 + (b + BAR) - sizeof(b)

In the above example there’s a combination of parameters, literals, parenthesized expression, usages of other macros, and built-in operators. DStep handles all those and translates it to:

enum BAR = 4;

extern (D) auto FOO(T0, T1)(auto ref T0 a, auto ref T1 b)

{

return a + 3 + (b + BAR) - b.sizeof;

}

Again, the expression is preserved as closely as possible to the original source code. The parentheses, the reference to the BAR macro, all are preserved.

Token Concatenation

This feature adds support for translating the token concatenation, or token pasting, operator to a D string concatenation:

#define CONCAT(prefix, name) prefix ## name

The above function-like macro concatenates the two given tokens. DStep translates that to a function that converts the arguments to strings and concatenates the two resulting strings. This can then be used together with the string mixin statement to give the same behavior as in C.

extern (D) string CONCAT(T0, T1)(auto ref T0 prefix, auto ref T1 name)

{

import std.conv : to;

return to!string(prefix) ~ to!string(name);

}

Another example is parameters combined with tokens:

#define CONCAT(prefix) prefix ## name

This translates similarly to the previous example, but since name is not a parameter this will be translated to a string literal:

extern (D) string CONCAT(T)(auto ref T prefix)

{

import std.conv : to;

return to!string(prefix) ~ "name";

}

Preprocessor Constants in Array Sizes

DStep will now preserve preprocessor constants for the size of arrays:

#define Foo 3

int a[Foo];

In previous versions of DStep the translation would just output the size of the array a as 3. This would be semantically accurate but the generated source

code would look less like the original C source code. Now DStep is able to translate preprocessor constants and can, therefore, use the preprocessor constant as the size of the array:

enum Foo = 3;

extern __gshared int[Foo] a;

In the above example, the manifest constant Foo is used as the size of a instead of a plain 3. This more closely matches the original C source code.

In previous versions of DStep comments were completely stripped out. With this release DStep is able to preserve comments in the D code from the original C code:

// This comment describes this whole file

// Documentation for the symbol `foo`

void foo();

/* Loose comment */ /* Loose comment */

/*

Multi-line loose comment.

Multi-line loose comment.

Multi-line loose comment.

Multi-line loose comment.

Multi-line loose comment.

*/ /* Loose comment */

int a; // this is `a`

In the above example there are three types of comments:

- A header comment for the whole file

- A preceding comment for the symbol

foo

- Loose comments not belonging to any symbol

- A trailing comment for the symbol

a

All of these comments are now properly preserved:

// This comment describes this whole file

extern (C):

// Documentation for the symbol `foo`

void foo ();

/* Loose comment */ /* Loose comment */

/*

Multi-line loose comment.

Multi-line loose comment.

Multi-line loose comment.

Multi-line loose comment.

Multi-line loose comment.

*/ /* Loose comment */

extern __gshared int a; // this is `a`

In the above example, notice how the header comment is placed above the extern (C): line. If a module declaration is output in the D file (when the --package flag is used), the header comment will be placed above that as well:

// This comment describes this whole file

module bar.foo;

extern (C):

// Documentation for the symbol `foo`

void foo ();

/* Loose comment */ /* Loose comment */

/*

Multi-line loose comment.

Multi-line loose comment.

Multi-line loose comment.

Multi-line loose comment.

Multi-line loose comment.

*/ /* Loose comment */

extern __gshared int a; // this is `a`

It’s also possible to disable the preservation of comments using the --comments=false flag.

Package Prefix

To better help organize bindings, DStep supports the

--package <name.of.package> flag. When this flag is enabled DStep will put all the translated modules in the <name> package. Note, this will only add the package prefix to the module declaration of the translated file. It will not place the output file in a directory corresponding to the package.

Removing Excessive Newlines

DStep will now remove excessive newlines but still preserve spacing for the original C code. This is best illustrated with an example:

int a;

int b;

In the above example there are two newlines between the declarations of a and b. DStep will remove the excessive newline and only output one to still preserve the spacing:

extern __gshared int a;

extern __gshared int b;

But if there is no spacing between the declarations, that is respected as well:

int a;

int b;

In the above example there is no newline between the declarations and DStep will preserve that:

extern __gshared int a;

extern __gshared int b;

Preserving Order of Declarations

In previous versions of DStep the declarations in the translated D code would follow a certain order defined by DStep, aliases first, then constants, then types and last functions. With this release, DStep will now preserve the order of the declarations of the original C code:

void bar();

struct Foo

{

int a;

};

Previous versions would translate the above to:

struct Foo

{

int a;

}

void bar ();

with the struct first and then the function declaration. With this release, the order is preserved:

void bar ();

struct Foo

{

int a;

}

Previous versions of DStep only allowed a single header file as input. With this release, multiple files can be passed to DStep at once. Each input file will produce one D source file as input. To pass multiple input files to DStep, just pass the filenames when invoking DStep.

$ dstep foo.h bar.h

Running the above command will produce two D source files: foo.d and bar.d.

If multiple input files and the -o flag are given, the -o flag specifies the output directory where the D source files will be placed. When multiple input

files are given it’s not possible to specify the names of the D source files.

$ dstep foo.h bar.h -o foobar

$ ls foobar

bar.d foo.d

The above command will place the two D source files in the directory foobar.

--reduce-aliases Flag

Normally when DStep translates a header file to a D module it will reduce aliases if possible. DStep contains a set of common typedefs that can be reduced to native D types. That means that code like this:

#include ;

int32_t a = 3;

Will be translated to the following D code:

extern __gshared int a;

In this release of DStep, there’s a new flag, --reduce-aliases. This flag allows the reduce aliases feature to be enabled or disabled. By default it’s enabled, but can be disabled by invoking DStep with the following command: dstep --reduce-aliases=false. When this feature is disabled, it will translate the above example to the following D code:

import core.stdc.stdint;

extern __gshared int32_t a;

It will keep the name int32_t as the type of the variable declaration and add the import for the module that contains the declaration of int32_t.

--alias-enum-members Flag

In C, enum members are accessible directly in the global scope. Example:

enum Foo

{

foo,

bar

};

enum Foo a = foo;

In D however, enum members need to be qualified with the enum name. The correct translation of the above would be:

enum Foo

{

foo = 0,

bar = 1

}

Foo a = Foo.foo;

In this release of DStep a new flag has been added, --alias-enum-members, that enables the generation of aliases to enum members at module scope. This will allow keeping the translation more closely to the original C code:

enum Foo

{

foo = 0,

bar = 1

}

alias foo = Foo.foo;

alias bar = Foo.bar;

By default this feature is not enabled for the translated D code to more closely follow the D conventions.

--translate-macros Flag

In this release, DStep can now translate several kinds of C macros to their equivalent in D. This might not always be desirable because the translations are not bulletproof. Therefore, there’s a new flag, --translate-macros, which will enable or disable the translation of macros. By default translation of macros is enabled.

libclang Bindings

DStep uses Clang as a library to process the C code. There two main ways of using Clang as a library. One is to use the C++ APIs directly. This will give full access to what Clang can do. The problem with this API is that is not a stable API. It’s also a C++ API and when DStep was first implemented, the C++ integration in D was quite lacking. One of my requirements when implementing DStep was to implement it in D. Therefore, the natural choice was to use the C API, called “libclang”, which is also provided. This library is the main interface intended to be used by editors and IDEs that want to leverage Clang as a library. It’s also recommended to use libclang when accessing Clang from a language other than C++—D in my case.

Since libclang is a C library only exposing C header files, I needed bindings to be able to use from it within D. Up until now these bindings were hand made. Fortunately, these headers are the most forgiving I have ever seen when it comes to translating into D. Only a single header file was needed containing hardly any macros at all. It was quite a quick job of translating these headers by using search-and-replace.

With this release of DStep, since DStep has improved so much, the bindings are now self-hosted. That is, DStep has been used to generate the bindings. In addition to that, generation of the bindings has now been added to the test suite to make sure it doesn’t break.

Support for Windows

DStep was originally developed on macOS since that is my main development platform. Thanks to the Posix standard it was easy to port to Linux as well. The first release of DStep was available for both macOS and Linux. Back in 2011 when the development of DStep first started, Clang did not support Windows. There seems to have been some support for MinGW, but that was not a target supported by DMD. The LLVM and Clang team has made huge progress since then and in 2016 when DStep got picked as a GSoC project, support for Windows was available, targeting compatibility with the Visual Studio compiler.

In this release, thanks to Wojciech Szęszoł, DStep is now available on Windows. Due to Clang being compatible with Visual Studio, DStep needs to be built to use the same object format. When compiling with DMD, that means compiling for 64-bit (via the -m64 flag) or using the -m32mscoff flag when compiling for 32 bit. The Dub package automatically takes care of this.

Continuous Integration/Deployment

In the area of CI/CD quite a few things have happened. Originally the test suite of DStep was implemented using Cucumber and Ruby. These tests were a form of end-to-end test and failed to take advantage of the reasons to use Cucumber in the first place. These tests have now been replaced with a combination of unit tests and end-to-end tests, all implemented in D.

LDC

LDC has been added as a supported compiler. That means that DStep is compiled with LDC in addition to DMD as part of the CI pipelines. Every commit and every pull request is now tested with LDC as well.

Upgrade of Compilers

Both DMD and LDC have been upgraded to their latest versions. In addition, beta and nightly releases are being tested in the CI pipelines. A scheduled job has been added to the CI pipelines as well, which will run once every day to make sure new releases of the compilers won’t break DStep even if no changes have been made to DStep. This also means that only the latest versions of LDC and DMD are supported for building DStep.

Testing Windows using AppVeyor

Since DStep now supports Windows as an additional platform a new CI pipeline has been added in the form of AppVeyor. This is a CI service that provides Windows as a platform to run builds on. This build run compiles DStep both using DMD and LDC and it also builds both 32-bit and 64-bit versions.

Complex Floating-Point Types

Another feature that is new in this version of DStep is that complex floating-point types are now supported. There are three complex types that are supported:

float _Complex, double _Complex and long double _Complex.

float _Complex a;

double _Complex b;

long double _Complex c;

The above code snippet in C is translated to the following D code:

extern __gshared cfloat a;

extern __gshared cdouble b;

extern __gshared creal c;

New Alias Syntax

Typedefs in C header files are translated to alias declarations in the D code. Up until this release they used the old alias syntax: alias oldName newName. Since the previous release of DStep the D language has improved and gained new features. One of them is a new (now considered the standard) alias syntax: alias newName = oldName. It’s easier to follow which name is the alias and which is the original when it’s using a more familiar syntax similar to variable declarations. Here’s an example of how the C code is translated into D code with the new alias syntax:

typedef int foo;

The above C code is translated to the following D code:

alias foo = int;

Custom Global Attributes

By default DStep doesn’t add any attributes like @nogc or nothrow to the translated code. In this release of DStep, support for attributes has been added. Custom global attributes can be enabled with the --global-attribute flag. For a C header file with the following content:

int a;

And invoking DStep with the following command:

$ dstep foo.h --global-attribute @nogc --global-attribute nothrow

Will output the following D code:

@nogc:

nothrow:

extern __gshared int a;

Rename Enums

Unlike in D, enums in C don’t create a new scope for their members, even if a name is given to the enum. Example:

enum

{

a,

b

};

enum Foo

{

c,

d

};

int e = a;

int f = c;

In the above example it’s possible to access the enum members from both of the enums without qualifying the type. In D, this is not the case for named enums. They require the qualifying the enum member with the type name:

enum

{

a,

b

}

enum Foo

{

c,

d

}

int e = a; // ok since the first enum is anonymous

int f = Foo.c; // need to qualifying the enum member with the type name

To reduce the risk of symbol conflict it’s quite common for C libraries to prefix enum members with the name of the type:

enum Foo

{

FooC,

FooD

};

While the following is perfectly fine in D as well, it gets a bit redundant and verbose to have to specify Foo twice:

enum Foo

{

FooC,

FooD

}

int c = Foo.FooC;

int d = Foo.FooD;

For this reason DStep now supports a new flag, --rename-enum-members, which when enabled will try to remove any prefix of the enum member names. Given the

following C header file:

enum Foo

{

FooA,

FooB

};

And running DStep as follows:

$ dstep foo.h --rename-enum-members

It will produce the following D code:

enum Foo

{

a = 0,

b = 1

}

DStep identified the Foo prefix and removed it from the enum member names. It also converted the names to lowercase to better match the standard D naming

conventions.

By default this feature is not enabled to more closely match the original C code.

Normalize Modules

When the --package flag is specified DStep will add a module declaration to all D modules. By default, it will use the name of the input file as the name of the module. In the C world there’s no direct file-naming convention. Some libraries will use all lowercase letters, some will use snake case, some will use camel case, some will use Pascal case, and so on.

The standard D naming convention for modules (and therefore files) is to use only lowercase letters and underscores, i.e snake case. To help with following this convention DStep now supports the new flag --normalize-modules. When this flag is enabled (and the --package flag is used) DStep will try to convert the name of the input file to a name matching the D conventions.

Given a C header file named Foo.h and only invoking DStep with the --package flag:

$ dstep Foo.h --package bar

DStep will produce the following D code:

module bar.Foo;

When the --normalize-modules flag is used as well:

$ dstep Foo.h --package bar --normalize-modules

DStep will output the following D code:

module bar.foo;

Note that Foo has been converted to foo.

Another example with a file using the Pascal naming convention:

$ dstep NSString.h --package bar --normalize-modules

And the result:

module bar.ns_string;

By default this feature is not enabled to more closely match the original C code.

Bit Fields

Another new feature that has been added in this release of DStep is support for bit fields. The bit field is a built-in language construct in C, but there’s no language support for it in D. Fortunately, with the help of D’s metaprogramming capabilities, the bit field has been implemented as a library construct and is available in the standard library [3]. The library construct will generate getters and setters that perform the same bit manipulation that

the C compiler would have generated.

The following snippet in C:

struct Foo

{

unsigned int a : 1;

unsigned int b : 2;

unsigned int c : 5;

};

Is translated to D:

struct Foo

{

import std.bitmanip : bitfields;

mixin(bitfields!(

uint, "a", 1,

uint, "b", 2,

uint, "c", 5));

}

The D translation makes use of the bitfields template from the standard library. It’s automatically imported, directly inside the struct, to minimize the scope of where the symbol is available.

In his day job, Jacob Carlborg is a DevOps engineer for Derivco Sweden, but he’s been using D on his own time since 2006. He is the maintainer of numerous open source projects, including DStep, a utility that generates D bindings from C and Objective-C headers, DWT, a port of the Java GUI library SWT, and DVM, the topic of another post on this blog. He implemented native Thread Local Storage support for DMD on OS X and contributed, along with Michel Fortin, to the integration of Objective-C in D.

Nearly all non-trivial programs allocate and manage memory. Getting it right is becoming increasingly important, as programs get ever more complex and mistakes get ever more costly. The usual problems are:

Nearly all non-trivial programs allocate and manage memory. Getting it right is becoming increasingly important, as programs get ever more complex and mistakes get ever more costly. The usual problems are: Last year,

Last year,  Fuzzing, or fuzz testing, is a powerful method to find hidden bugs in your application. The basic idea is to present random input to your application and monitor how it behaves. If it crashes or shows some other unusual behavior then you have found a bug.

Fuzzing, or fuzz testing, is a powerful method to find hidden bugs in your application. The basic idea is to present random input to your application and monitor how it behaves. If it crashes or shows some other unusual behavior then you have found a bug.

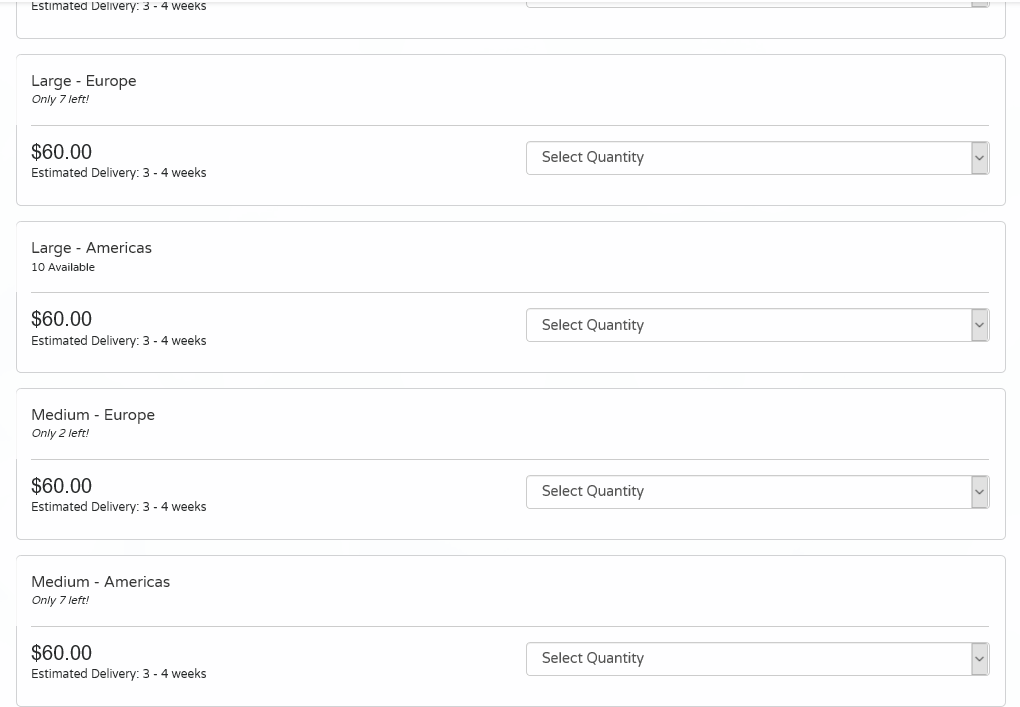

This campaign will help us minimize shipping costs and keep track in real time of the number of shirts remaining. Once the shirts are gone, the campaign is closed. So please, when you make your donation, help us out by selecting the region in which you live if there are still shirts available.

This campaign will help us minimize shipping costs and keep track in real time of the number of shirts remaining. Once the shirts are gone, the campaign is closed. So please, when you make your donation, help us out by selecting the region in which you live if there are still shirts available.

In late November of last year, Laeeth Isharc of

In late November of last year, Laeeth Isharc of