In late November of last year, Laeeth Isharc of Symmetry Investments expressed interest in hosting DConf 2019 in London. On a personal note, I had been looking for an excuse to get back to London since my brief visit at the end of the first Berlin DConf in 2016, so as my inbox filled with emails discussing possible venues, my excitement started to build. At one point, the Royal Institution was on the list of candidates.

I couldn’t make an announcement yet as nothing was certain, but I did start teasing it on Twitter and here on the blog. At our first D Language Foundation quarterly meeting on December 1, there was unanimous agreement that London was the place to be. As the days passed and it seemed to be a near certainty, I was eager to make the announcement, but near certainty is not certainty. I had to wait until Symmetry had selected a venue. That news reached my inbox on December 21. I announced it on the blog the next day.

After that, it was time to get into the details.

Planning a DConf

Past editions of DConf were organized either by hosts with employees who regularly organize conferences as part of their job descriptions, or, in the case of DConf 2018, by an event planner hired by the host. This year there were no event planners and no dedicated conference organizers. It was a very different experience from my first peek behind the DConf curtain last year. Most of the details were hashed out in numerous emails and phone calls with Belinda Liao. Though we can thank Laeeth and Symmetry for making DConf 2019 happen, we owe a big thanks to Belinda for making it work.

I first became acquainted with Belinda during last year’s Symmetry Autumn of Code. Laeeth introduced her as his “chief of staff in London”, Symmetry’s tech team affectionately refers to her as “the official nag”, and her official title is Business Manager for Technology at Symmetry. Throughout the planning for DConf, she was the one doing all of the legwork. She also made sure we covered all of the bases, querying me about our requirements, pointing out anything I overlooked, and bringing new ideas.

The venue told us they would handle the live stream, but we also wanted a separate solution for recording and producing the individual talk videos. Belinda hired Stage Engage, who sent a single technician, Rowan While, to get the job done. He set up multiple cameras and sat at his primary camera in a back corner for the entirety of the three days of talks. He and his colleagues did an excellent job, and three weeks after DConf the link to download the videos was sitting in my inbox. They’re all available on our YouTube channel and are accessible, along with the slides for each talk, through the DConf 2019 schedule.

During the talk submission period, Ethan Watson reached out to tell us he could submit a talk, help us in reviewing drafts of the speakers’ slides, or volunteer to be the emcee. Andrei suggested he do all three. So he did!

This year was the first time we asked the speakers to submit drafts of their slides. Last year, Andrei participated in a conference where the speakers were required to present their talks via Skype for review prior to the conference. At the end of DConf 2018, he suggested that we consider doing the same this year. When Ethan came on board, he proposed instead simply reviewing drafts of the slides, which is standard procedure at the Game Developers Conference, where he had previously presented. So we set a deadline for the speakers to send us their drafts. Ethan reviewed them and provided feedback.

Planning the peripherals

When I first heard we might be going to London, I wanted to find some places to see other than the well-known tourist spots. On my first visit, I’d only had a day to be a tourist. This time, my wife was coming along and we were making a two-week trip out of it. So I hit YouTube to search for some video guides. That’s where I found Joolz Guides.

Julian McDonnell uses the channel to post London history walks and travel films. A filmmaker and actor, he also makes himself available for private guided walks through his web site. The idea came to me later than it should have, but in late February I contacted Julian to see if he would be available for a couple of pre-DConf walks. He doesn’t generally work with large groups, though he told me he had scheduled a pub crawl with 18 employees of a company. So we set 18 as the maximum size of a group, worked out a payment structure based on the total number of people, and I got Andrei’s approval for the foundation to cover the cost.

Around the same time, I got in touch with a pub near the venue. Finding a nightly gathering spot was a concern from the beginning. At past conferences, it was either the “official” hotel or, last year, the venue itself. There were several hotel options around the venue, many of them rather pricey. The budget hotels didn’t strike me as places where we could be holding our nightly “BeerConf”. I wanted to avoid the situation that happened in 2017 in Berlin when the hotel staff kicked us out of the lobby and relegated us to a back room. Belinda offered suggestions and I also sought advice from Russel Winder. Ultimately, I stumbled upon the Prince Arthur Pub while poking around Google Maps one night.

The pub has a second-floor space available for private hire. Getting it booked for three nights was a simple process. It also presented an opportunity for sponsorship. Ali Çehreli had been hoping to get his employer, Mercedes-Benz Research and Development North America, to sponsor us in one form or another. We had been looking at potential swag, but now that we had the pub, he got approval for the company to cover the booking fee and a couple of rounds of drinks for each person who joined us each night.

Later on, Symmetry rented a different space nearby for the third night, including beer and food. So we had a cozy neighborhood pub for the first two nights of BeerConf and a more upscale bar for the third. Two different atmospheres that both allowed us all to have a good time.

The tours

The first of the two tours we booked with Julian took place two days before the conference, starting outside the Ritz at Green Park station (which, as it turns out, is right up the street from Symmetry) and ending at the Strand near Charing Cross. The next day we met at Temple station and went through Temple (the legal district named for Temple Church, which was built by the Knights Templar) and on a winding route through the City of London.

Julian pointed out a number of sites we most likely would not have picked out on our own, giving us little nuggets of history for each. For me, some of the highlights were the building where the Beatles did their famous rooftop concert, the tailor shop that served as a front in the movie Kingsman: The Secret Service, the story of Temple Church (we decided not to go inside at the time, but I will visit on my next London trip to see the tomb of William Marshal), St. Etheldreda’s Church in Ely Place (dating back to the reign of Edward I), and the Charterhouse. Oh, and an interesting bit of trivia about the origin of the Japanese word for suit.

Julian is an entertaining guide and I believe everyone enjoyed the tours. If you’re ever in London with time to spare, I recommend you contact him about a private walk or a historical pub crawl.

99 City Road

The conference took place on the second floor of Inmarsat’s Old Street office building. Some of us arrived before 8:00 am on the first day and were directed by the security staff to a cozy little waiting area on the first floor. When the time came, we were guided to a side entrance and issued ID cards that would give us access through the main entrance until Saturday.

As far as I’m aware, the conference went smoothly for just about everyone on site. There were a few hiccups along the way, most of which were noticed by few, if any, of the attendees. For example, those of us who arrived early on the first day found that the power outlets were located under trapdoors scattered throughout the room, but they were out of range of many of the seats. Before it became an issue, Belinda appeared with two of the venue staff, all bearing power strips. Belinda put out most fires before anyone smelled smoke.

Speaking of fires, we started the third day with a test of the building’s fire alarm system. It wasn’t a drill, just a test, so we didn’t have to go anywhere. All was well. Until Steven Schveighoffer got about 75% through his talk. The Stage Engage team edited it out of their recording, but in the live stream, you can see the point where Steve was interrupted by the fire alarm. This time, it was no test. We had to evacuate the building. Several folks got outside and were told to move down the street before abruptly being called back. Five minutes later, everyone filed back into the conference hall and Steve was able to finish his talk.

The venue staff encountered their own minor issue on the first day. At every DConf, we have mid-morning and mid-afternoon snacks, but the coffee is generally available all day. At 99 City Road, they’re used to events with “coffee breaks” in the mid-morning and mid-afternoon, where the coffee is set up and taken down along with the snacks. They soon learned that many DConf attendees are powered by caffeine, so they adapted and left the coffee out all day for the duration of the conference.

The food provided for our lunch each day was fantastic. Speaking for myself, it’s the best food I’ve ever had at a DConf (I did not attend the 2014 and 2015 editions, but I doubt that they compare). It was so good that I went back for seconds each day, which was possible because we had an abundance of food. If we find ourselves at this venue again, the quality of lunch is something we know we don’t have to worry about.

We did encounter one major issue this year, though it did not affect the conference attendees. A number of remote viewers on the first day encountered issues with the live stream, with some unable to see it and others having audio trouble. The venue was using Webex to handle the live stream. Sinisa Poznanovic, the venue’s A/V tech, attempted to switch to YouTube during the lunch break, but the video was, oddly, flipped horizontally. He was unable to resolve the issue before the afternoon session, but he promised to stay after we left in the evening until he got it working. When we came in the next morning, the YouTube live stream was set up and working properly. I have to say it was a pleasure working with Sinisa, and he has our gratitude for the great work he did throughout the conference.

The Webex issue is something none of us foresaw, but it’s possible we could have. Belinda had sent me a PDF with the links and login information several days before the conference. At the time, I was in Canterbury hanging out with a couple of old friends. I had never heard of Webex, but a cursory search on my phone showed that it’s owned by Cisco. That and the fact that it’s what the venue crew always use were enough to satisfy me, so I searched no further and went back to my vacation. In hindsight, had I dug more deeply into the search results (which I have since done), I would have learned that 64-bit Linux is not officially supported. Had I posted the login details to the forums as soon as I got them from Belinda, those with negative Webex experiences could have spoken up prior to the conference. Such red flags might have motivated us to insist on using YouTube instead.

In the future, we’ll require YouTube for all of our live streaming and, if we encounter anything new, I’ll enlist some help to do more thorough vetting in an effort to uncover potential problems.

The AGM

One of the proposals that came in during the talk submission period was from Nicholas Wilson outlining an Annual General Meeting. When the selection committee met to select this year’s speakers, we decided it would not be feasible to have an AGM as part of the regular schedule. We agreed instead to hold it before the Hackathon.

Just as the DConf Hackathon isn’t the sort of event most people think of when they hear the term, we didn’t envision the AGM as the sort of meeting corporate shareholders would be familiar with. We wanted to limit it to two hours so that we would have time for people to discuss their Hackathon plans before lunch. Nicholas had the idea and put together the agenda, so he would be the moderator. Ethan and I would roam the room with mics so attendees could ask questions. Initially, we had no plans to live stream the event, but in the end, we decided to do it anyway.

The meeting began with an announcement from Andrei. For those who haven’t heard, he is stepping away from his leadership role in the D Language Foundation. He’s still involved in the D community and still manages the foundation’s finances, but for personal reasons, he can no longer devote the time and attention a leadership role requires. Átila Neves was invited to join the team and take over that role. To what I’m certain will be the benefit of the D community, he accepted. It was made possible because Laeeth, his employer, agreed to allow him to do foundation work on Symmetry time.

One of the benefits of DConf is face-to-face communication. Some of the conversations that take place lead to new ideas, collaborations, and projects, but the majority of them are lost to time and memory. In our first AGM, we have not only the benefit of face-to-face communication but also a video record. We covered a lot of ground in the meeting: DUB, DIP 1000, the PR queue, shared, @property, std.experimental, DMD as a library, the DIP process, and more. The ideas put forward are there on video so they won’t be lost. And, thanks to the note-taking skills of Johannes Loher, we have a nice list of action items to work with.

One direct result of the AGM is that I recently revised the DIP process to address some concerns that have been raised in recent times. More items will be ticked off the action list over time.

The quarterly D Language Foundation meeting

The first two quarterly meetings took place over Skype. This time, we were able to hold it face-to-face. Andrei, Walter, Ali, and I were joined on site by representatives from a handful of D shops. We had scheduled the meeting during one of the talks so that we could ensure we’d have a quiet spot for those who were participating remotely. Unfortunately, we had issues with Google Talk for one remote participant while others who were to participate via Skype were too busy to attend.

Skyping these meetings is better than nothing, but meeting face-to-face was a far more efficient and enjoyable experience. We had productive discussions on several topics, with more participation than we usually get in the Skype meetings, where the discussions tend to be less animated. The company reps aired their issues, we talked about some future plans, and all went well. Most of the items discussed will benefit the community at large when they are finally realized, e.g. Bugzilla issues and new tooling.

I expect our quarterly meetings will become a regular sideline event at future DConfs.

Until next year…

I thoroughly enjoyed myself at DConf this year. Last year, I was too busy emceeing to have much fun. As Ethan discovered this year, there’s more to the emcee job than one might expect (and I would say he’s much more suited to it than I am). When I did it, I was worried about drinking in the evening and wanted to get in bed early each night, so I only fully participated in BeerConf the final night. This year, I had no such concerns, though I did leave early the second night to surprise my wife for dinner.

I hope that everyone at DConf 2019 enjoyed it as much as I did. I also hope that those who were unable to attend this year, especially those who have never attended a DConf, can make the trip next year no matter where in the world we end up. Just think, it’s only been a few weeks since the conference, but we should be talking about DConf 2020 in just five more months.

The countdown is on!

Fuzzing, or fuzz testing, is a powerful method to find hidden bugs in your application. The basic idea is to present random input to your application and monitor how it behaves. If it crashes or shows some other unusual behavior then you have found a bug.

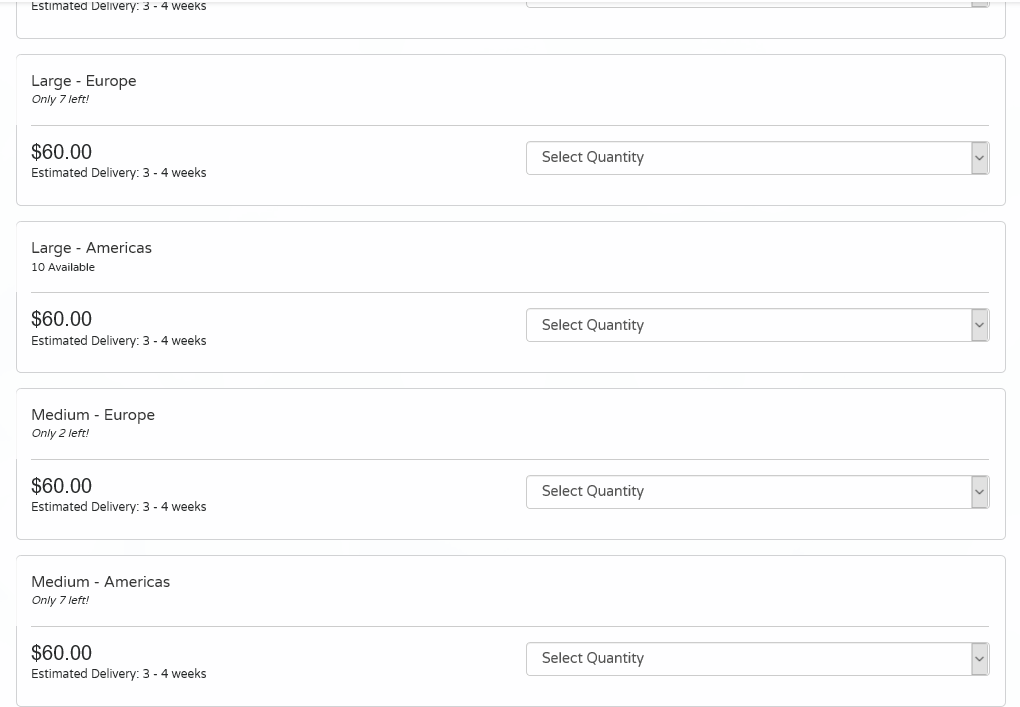

This campaign will help us minimize shipping costs and keep track in real time of the number of shirts remaining. Once the shirts are gone, the campaign is closed. So please, when you make your donation, help us out by selecting the region in which you live if there are still shirts available.
